from keras.datasets import boston_housing

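import numpy

# Note: on recent NumPy versions this call may raise a ValueError, because the nested arrays have different shapes
print(numpy.shape(boston_housing.load_data()))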

(train_data, train_labels), (test_data, test_labels) = boston_housing.load_data()

print(len(train_data))

print(len(train_labels))

print(len(test_data))

print(len(test_labels))

print(numpy.shape(train_data))

print(numpy.shape(train_labels))

print(numpy.shape(test_data))

print(numpy.shape(test_labels))

print(train_labels[:10])

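# Compute the statistics once, on the training data only, before train_data is overwritten
mean = train_data.mean(axis=0)

std = train_data.std(axis=0)

train_data = (train_data - mean) / std

# Scale the test data with the training statistics
test_data = (test_data - mean) / std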

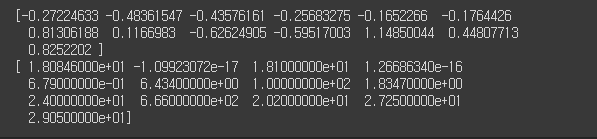

print(train_data[0])

# Standardized features: mean 0 and standard deviation 1 per feature (values are not limited to the -1 to +1 range)

print(test_data[0])
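The standardization step can be sketched on a tiny array. The values below are made up for illustration and are not taken from the Boston housing data. The point is that the mean and standard deviation are computed once on the training split and then reused for the test split.

```python
import numpy

# Toy data (hypothetical values): 4 training rows and 2 test rows, 2 features each
toy_train = numpy.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]])
toy_test = numpy.array([[2.5, 25.0], [5.0, 50.0]])

# Statistics come from the training split only
toy_mean = toy_train.mean(axis=0)
toy_std = toy_train.std(axis=0)

# The same statistics are applied to both splits
toy_train_scaled = (toy_train - toy_mean) / toy_std
toy_test_scaled = (toy_test - toy_mean) / toy_std

print(toy_train_scaled.mean(axis=0))  # approximately 0 for each feature
print(toy_train_scaled.std(axis=0))   # approximately 1 for each feature
print(toy_test_scaled)                # test rows scaled with the training statistics
```

The scaled test rows do not necessarily have mean 0 themselves, which is expected: no information from the test split is used to compute the scaling.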

from keras import models

from keras import layers

def build_model():

    model = models.Sequential()

    model.add(layers.Dense(64, activation='relu', input_shape=(train_data.shape[1],)))

    model.add(layers.Dense(64, activation='relu'))

    # Single output unit with no activation (linear layer)
    model.add(layers.Dense(1))

    model.compile(optimizer='rmsprop', loss='mse', metrics=['mae'])

    return model

The last layer of this network has a single unit and no activation function (a linear layer).

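This is the typical setup for scalar regression (regression that predicts a single continuous value).

Because the last layer is purely linear, the network is free to learn to predict values in any range.

The model is compiled with the mse loss function. MSE stands for mean squared error: the average of the squared differences between predictions and targets. It is a widely used loss function for regression problems.

During training, a new metric, mean absolute error (MAE), is monitored. It is the average of the absolute differences between predictions and targets. The targets here are in units of $1,000, so an MAE of 0.5 in this example means the predictions are off by about $500 on average.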
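As a small worked example of the two quantities, MSE and MAE can be computed by hand with NumPy. The predictions and targets below are hypothetical values chosen for easy arithmetic, not model output.

```python
import numpy

# Hypothetical targets and predictions, in units of $1,000
toy_targets = numpy.array([20.0, 25.0, 30.0, 15.0])
toy_predictions = numpy.array([21.0, 24.0, 32.0, 15.0])

toy_errors = toy_predictions - toy_targets  # [1, -1, 2, 0]

toy_mse = numpy.mean(toy_errors ** 2)        # mean squared error: (1 + 1 + 4 + 0) / 4
toy_mae = numpy.mean(numpy.abs(toy_errors))  # mean absolute error: (1 + 1 + 2 + 0) / 4

print(toy_mse)  # 1.5
print(toy_mae)  # 1.0
```

With targets in units of $1,000, an MAE of 1.0 here would correspond to being off by about $1,000 on average. MSE penalizes the single larger error (2) more heavily than MAE does.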

This is not a true/false (classification) problem, so accuracy is not used.

k = 4

num_val_sample = len(train_data) // k

num_epochs = 100

all_scores = []

for i in range(k):

    print("Processing fold: ", i)

    # Validation data: the i-th partition
    val_data = train_data[i * num_val_sample : (i + 1) * num_val_sample]

    val_targets = train_labels[i * num_val_sample : (i + 1) * num_val_sample]

    # Training data: all the other partitions
    partial_train_data = numpy.concatenate([train_data[: i * num_val_sample], train_data[(i + 1) * num_val_sample :]], axis=0)

    partial_train_targets = numpy.concatenate([train_labels[: i * num_val_sample], train_labels[(i + 1) * num_val_sample :]], axis=0)

    # Build a fresh (already compiled) model for each fold
    model = build_model()

    model.fit(partial_train_data, partial_train_targets, epochs=num_epochs, batch_size=1, verbose=1)

    val_mse, val_mae = model.evaluate(val_data, val_targets, verbose=1)

    all_scores.append(val_mae)

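Here, concatenate joins arrays along the given axis. It is used to stitch the parts before and after the validation fold back together into one training set.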
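The fold slicing can be sketched on a small stand-in array to see which samples end up in each part. The array and the fold index below are assumptions chosen for illustration.

```python
import numpy

toy_samples = numpy.arange(8)  # stand-in for 8 training samples
toy_k = 4
toy_fold_size = len(toy_samples) // toy_k  # 2 samples per fold

fold = 1  # the second fold is held out for validation

toy_val = toy_samples[fold * toy_fold_size : (fold + 1) * toy_fold_size]

# Everything before and after the validation slice is joined into the training part
toy_train_part = numpy.concatenate(
    [toy_samples[: fold * toy_fold_size], toy_samples[(fold + 1) * toy_fold_size :]],
    axis=0,
)

print(toy_val)         # [2 3]
print(toy_train_part)  # [0 1 4 5 6 7]
```

For the first fold the slice before the validation part is empty, and for the last fold the slice after it is empty. concatenate handles both cases without special treatment.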

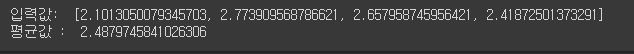

print("Per-fold validation MAE: ", all_scores)

numpy.mean(all_scores)

print("Mean: ", numpy.mean(all_scores))

# Run again with 500 epochs, keeping the per-epoch validation MAE of each fold

k = 4

num_val_sample = len(train_data) // k

num_epochs = 500

all_mae_history = []

for i in range(k):

    print("Processing fold: ", i)

    val_data = train_data[i * num_val_sample : (i + 1) * num_val_sample]

    val_targets = train_labels[i * num_val_sample : (i + 1) * num_val_sample]

    partial_train_data = numpy.concatenate([train_data[: i * num_val_sample], train_data[(i + 1) * num_val_sample :]], axis=0)

    partial_train_targets = numpy.concatenate([train_labels[: i * num_val_sample], train_labels[(i + 1) * num_val_sample :]], axis=0)

    model = build_model()

    history = model.fit(partial_train_data, partial_train_targets, epochs=num_epochs, batch_size=1, verbose=1, validation_data=(val_data, val_targets))

    # The key name can differ by Keras version (older releases use 'val_mean_absolute_error'); check history.history.keys()
    mae_history = history.history['val_mae']

    all_mae_history.append(mae_history)

print(history.history.keys())

average_mae_history = [numpy.mean([x[i] for x in all_mae_history]) for i in range(num_epochs)]
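The list comprehension above averages the folds epoch by epoch. A minimal sketch on made-up histories (2 folds, 3 epochs; the numbers are hypothetical) shows the same computation, along with an equivalent NumPy form that assumes every fold has the same number of epochs.

```python
import numpy

# Hypothetical validation MAE histories: one inner list per fold
toy_histories = [[3.0, 2.0, 1.0], [5.0, 4.0, 3.0]]
toy_epochs = 3

# Same pattern as above: for each epoch, average that epoch's value across folds
toy_avg_loop = [numpy.mean([x[i] for x in toy_histories]) for i in range(toy_epochs)]

# Equivalent: treat the histories as a (folds, epochs) array and average over the fold axis
toy_avg_axis = numpy.mean(toy_histories, axis=0)

print(toy_avg_axis)  # [4. 3. 2.]
```

Both forms give one averaged value per epoch. The axis=0 form is shorter but only works when all histories have equal length.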

import matplotlib.pyplot as plt

plt.plot(range(1, len(average_mae_history) + 1), average_mae_history)

plt.xlabel('Epochs')

plt.ylabel('Validation MAE')

plt.show()

def smooth_curve(points, factor=0.9):

    smoothed_points = []

    for point in points:

        if smoothed_points:

            previous = smoothed_points[-1]

            # Exponential moving average of the previous smoothed point and the current point
            smoothed_points.append(previous * factor + point * (1 - factor))

        else:

            smoothed_points.append(point)

    return smoothed_points

smooth_mae_history = smooth_curve(average_mae_history[10:])

plt.plot(range(1, len(smooth_mae_history) + 1), smooth_mae_history)

plt.xlabel('Epochs')

plt.ylabel('Validation MAE')

plt.show()
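The smoothing used for this second plot is an exponential moving average: each point is replaced by a weighted blend of the previous smoothed point and the current raw point. A minimal sketch on a short made-up list, with the function repeated so the block runs on its own and factor set to 0.5 to keep the arithmetic easy to follow:

```python
def smooth_curve(points, factor=0.9):
    smoothed_points = []
    for point in points:
        if smoothed_points:
            previous = smoothed_points[-1]
            # Blend the previous smoothed value with the current raw value
            smoothed_points.append(previous * factor + point * (1 - factor))
        else:
            # The first point has nothing to blend with, so it is kept as-is
            smoothed_points.append(point)
    return smoothed_points


toy_points = [1.0, 2.0, 3.0]  # hypothetical values
toy_smoothed = smooth_curve(toy_points, factor=0.5)

print(toy_smoothed)  # [1.0, 1.5, 2.25]
```

A larger factor (such as the default 0.9) gives more weight to the past and produces a smoother curve. The first 10 epochs are dropped before smoothing, via average_mae_history[10:], because their values are on a very different scale from the rest of the curve.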

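# Note: `model` here is the one trained in the last fold above, not a model retrained on all the training data
test_mse_score, test_mae_score = model.evaluate(test_data, test_labels)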

print(test_mse_score)

print(test_mae_score)
